Tones in the Key of Virtual Space

Abstract

Room acoustics is the phenomenon of how sound performs and interacts with a space. Virtual reality is an emerging technology that enhances a reality experience with an unlimited amount of space. This essay focuses on the interaction between sound and space in virtual reality. Two key areas are explored through this journey: how we hear space and interpret it as a meaningful experience such as music, and how the latest virtual reality experience aids or hinders our perception of sound and space.

After case-study and related industry analysis, I turned a series of room spaces into interactive spatial-acoustic experience centres using emerging digital tools such as VR. The prototypes progress from preliminary to complex and build up the narrative of my design intent. The final proposal is a virtual sound architecture in which multiple players can hear their voices turn into a harmonized tune.

Keywords

Room acoustics, Reverberation, Virtual Reality, Spatial-acoustic experience

Introduction

How to hear the virtual world?

Hearing music and wearing a virtual reality (VR) headset have something in common: the user is completely immersed in the respective medium. It is a dream-like moment in which the audience forgets where they stand, what time it is, and even who they are. In music, this feeling of immersion is caused by omnidirectional acoustics, which raise an emotional response. In VR, it is caused by blocking out the physical world and creating something new virtually. This comparison yields an interesting observation: sound acts as a link for the user’s engagement with space, while vision acts as a boundary.

Today, we live in a quickly developing environment in which new technologies increasingly blur the line between the physical and virtual worlds. Among these technologies, virtual reality (VR) and augmented reality (AR) capture the most attention. Products built on them include Google Cardboard, HTC Vive, Oculus Quest, and many more. Setting specific brands aside, the effect these technologies produce originates from a head-worn display device called the “Sword of Damocles”. When worn, this device allows the user to observe a virtual space computed with 3D graphics. With a simple three-dimensional image and no sound, it gave its early users a strong sense of immersion, like losing oneself in a piece of music or in a place one forgets to leave.

Researchers like to use the metaphor of a room to define two new emergent experiences which this device generates. The difference lies in whether or not we turn on the light in the room we inhabit. If we operate the headset in a dark room, the user can only experience the virtual world. This is called “Virtual Reality” (VR) because the reality experienced is created in a computer. However, if we turn on the light, the virtual space is overlaid on the background of physical space; this is called “Augmented Reality” (AR) because the physical reality is edited by the virtual one. A question arises: if the room we inhabit keeps changing, will sound change accordingly? Will our perception of sound change as well? The hypothesis of this thesis is that our perceptions of sound and space can reach a deeper, richer, and more sensitive level when connected through the embodied experience of Virtual Reality.

To investigate how a space would sound and integrate it into spatial-acoustic experience design, this research contains three parts. The first part focuses on room acoustics in the physical world. The room with the “Sword of Damocles” stays dark in this part. In explaining room acoustics in the physical world, I will start with a film scene to illustrate the foundation of hearing space. I will continue with the examples of I am Sitting in a Room (Alvin Lucier), the Philips Pavilion (Iannis Xenakis), and finally Acqua Alta to explore musical perception in relation to sound, space, and media. My early project Canon will be introduced in this part.

Then let us switch on the light of the room while wearing the “Sword of Damocles”. This thesis will look at the latest progress in virtual reality and focus on its interaction with architectural design and musical experience. A few VR experiences which try to incorporate spatial audio are presented and analyzed. Then, I will use my second project, Virtual Acoustic Instrument, to illustrate my first attempt at designing an acoustic experience in a virtual site.

The last part shows my on-going research project Sound Odyssey. By creating a real-time room acoustic simulator in virtual reality, a series of spatial experiences are combined with the senses of touch, sound, and vision. This prompts a possibility of integrating the spatial-acoustic experience as a digital tool for auralization and acoustic simulation in architecture design.

Part 1 Listen, Space is singing.

How we hear the space: Reverberation as the tone

“Can architecture be heard? Most people would probably say that architecture does not produce sound, it cannot be heard. But neither does it radiate light and yet it can be seen, We see the light it reflects and thereby gain an impression of form and material. In the same way we hear the sounds it reflects and they, too, give us an impression of form and material. Differently shaped rooms and different materials reverberate differently.”[1]

— Rasmussen,1964

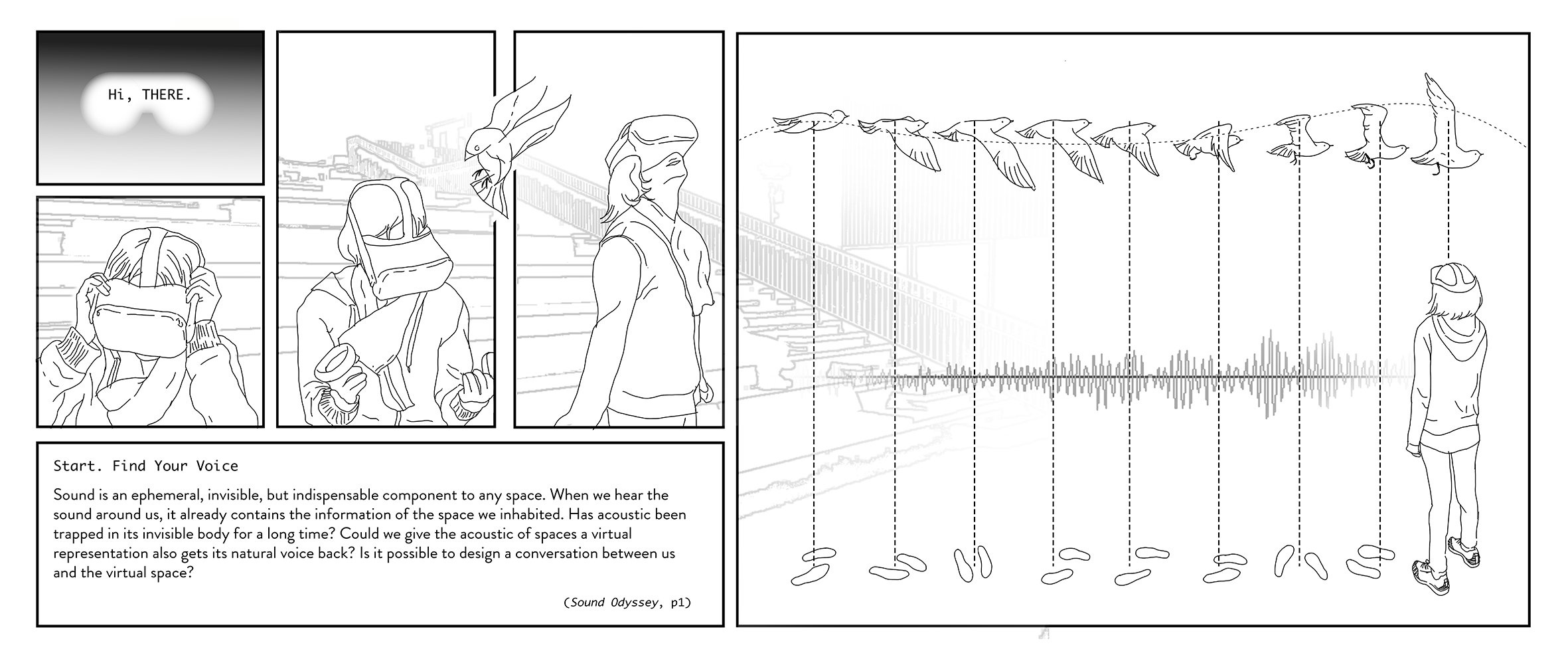

Sound is an ephemeral, invisible, but indispensable component of any space. When we hear the sound around us, it already contains information about the space we inhabit. Let us imagine a scene: you are standing in an unfamiliar place, you are being chased by enemies, and you have to find the way out. Many branches connect to the exit; however, they are all covered in darkness. What would you do next?

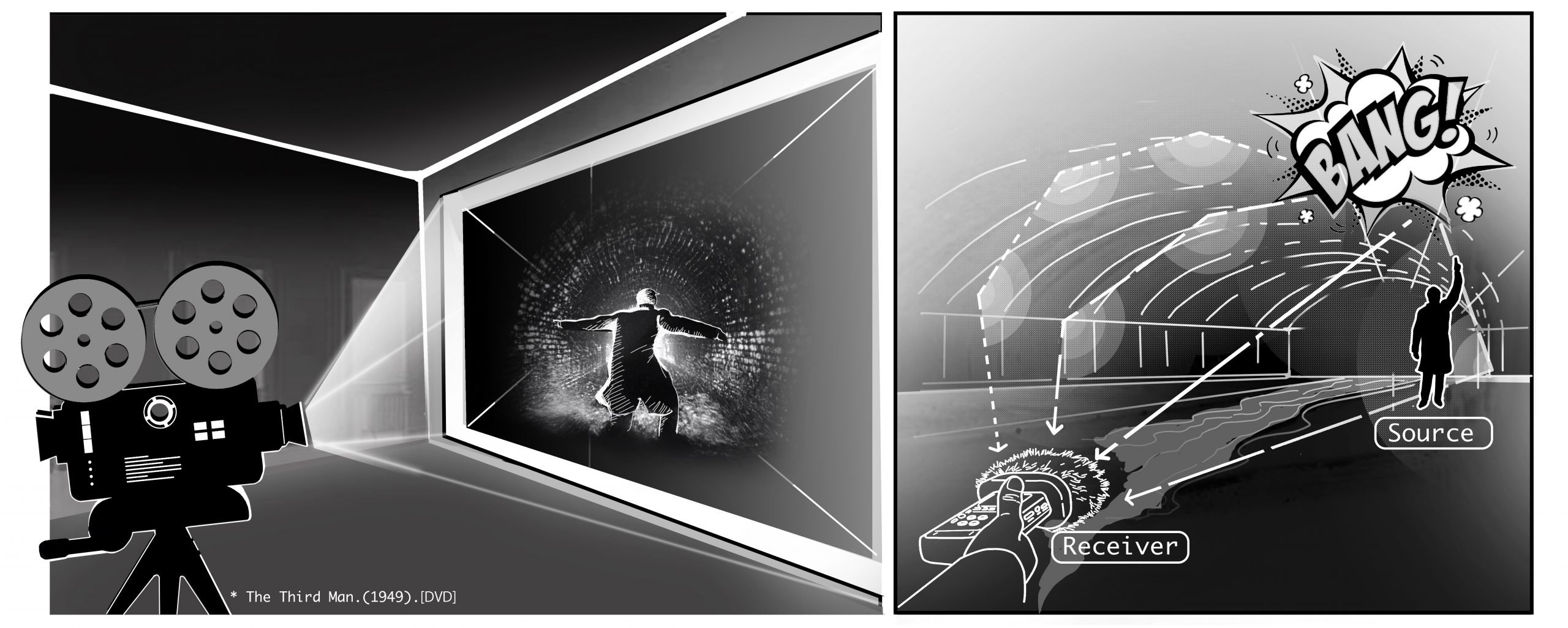

This question is not purely imaginary; it is a classic scene from the movie The Third Man[2]. Harry Lime, a gangster played by Orson Welles, is chased by a group of police through the Vienna sewers. When Harry feels that he is lost in the gloomy underground tunnel, he raises his gun and fires shots in the air. Steen Eiler Rasmussen described the gunshots in his book, Experiencing Architecture: the “ear receives the impact of both the length and the cylindrical form of the tunnel”[3]. If we compare the four gunshots fired at separate spots in the tunnel, we can hear that the slope of the reverberant decay changes significantly.

According to the sound engineering handbook Room Acoustics, this decay can be divided into two parts: Early Reflections (ER) and Late Reverberation (LR). ER signal the location of the source, while LR reveals the geometry of the space and how the objects inside it absorb sound[4]. These rules were first revealed by Wallace C. Sabine[5]. Sabine’s formula is widely used to calculate the reverberation time, which is the time it takes for a sound to decay by a given amount. For example, the time for the sound level to drop by 60 decibels is called RT60 (or T60). The formula shows a positive correlation between the volume of a room and the duration of its echo and reverberation.

$$RT_{60} \approx \frac{0.161\,\mathrm{s/m} \cdot V}{S_a}$$

$RT_{60}$ is the reverberation time (to drop 60 dB)

$V$ is the volume of the room

$S_a$ is the total absorption in sabins
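As a worked illustration of Sabine's formula, the following minimal Python sketch computes RT60 for a box-shaped room. The function name `sabine_rt60`, the room dimensions, and the absorption coefficients are all hypothetical values chosen for illustration; they do not come from any measurement in this research.

```python
def sabine_rt60(volume_m3, surfaces):
    """Estimate RT60 in seconds with Sabine's formula.

    surfaces: list of (area_m2, absorption_coefficient) pairs.
    """
    if volume_m3 <= 0:
        raise ValueError("volume must be positive")
    # Total absorption Sa in metric sabins: sum of area * coefficient.
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    if total_absorption <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / total_absorption


# Hypothetical 10 m x 8 m x 4 m room; coefficients are illustrative only.
room_volume = 10 * 8 * 4
room_surfaces = [
    (10 * 8, 0.30),            # floor
    (10 * 8, 0.10),            # ceiling
    (2 * (10 + 8) * 4, 0.05),  # four walls
]

rt60 = sabine_rt60(room_volume, room_surfaces)
# Same absorbing surfaces, twice the volume: RT60 doubles.
doubled = sabine_rt60(2 * room_volume, room_surfaces)
```

With the absorbing surfaces held fixed, doubling the volume doubles the estimated reverberation time, which is the positive correlation between volume and reverberation described above.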

After the scientific illustration of how we hear the geometry of the space, I would like to interpret this scene as an acoustic performance situated in a past site. The impulse response of the underground tunnel happens to be recorded by film. Each time this episode is played on screen, the audience interacts with the space in the scene via audio rather than video. Although the space is not totally dark, it is actually sound which describes the view of the space.

With existing audio convolution technology, we could now use the gunshots, which carry the absorption and reflection detail of the tunnel, to rebuild the sonic space acoustically. Ivan Dokmanić’s research contributes an algorithm that can reconstruct a convex polyhedral room from a few impulse responses[6]. In theory, then, it is possible to reconstruct this tunnel space from the shots.
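The convolution idea can be sketched in a few lines of Python. This is only a toy illustration, assuming a recorded gunshot can be treated as the impulse response of the space; the sample values in `toy_ir` are invented, and a real convolution reverb would use FFT-based processing on recorded audio rather than this direct loop.

```python
def convolve(dry, impulse_response):
    """Direct-form linear convolution of two lists of samples."""
    if not dry or not impulse_response:
        return []
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, x in enumerate(dry):
        for j, h in enumerate(impulse_response):
            # Each input sample launches a scaled copy of the room's response.
            out[i + j] += x * h
    return out


# Invented impulse response: direct sound, then two weaker reflections.
toy_ir = [1.0, 0.0, 0.5, 0.0, 0.25]

# A single click played "in" the room simply returns the room's response.
dry_click = [1.0, 0.0, 0.0]
wet_click = convolve(dry_click, toy_ir)

# Two clicks in a row produce two overlapping copies of the response.
wet_pair = convolve([1.0, 1.0], [1.0, 0.5])
```

Any dry recording convolved with the impulse response is heard as if it had been played in the recorded space, which is why the filmed gunshots are enough to carry the tunnel's acoustics.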

Just as sound response can reveal location and geometry, it can also offer a more intimate interaction than vision. Juhani Pallasmaa, in his book The Eyes of the Skin, argues that a space can be read through its sound as well as its visual shape. He also contrasts the two modes of perception: vision is directional, whereas sound is omnidirectional; sight implies exteriority, whereas sound creates interiority; sight is solitary, whereas hearing creates a sense of connection and solidarity[7]. Hearing the built environment colors the experience and understanding of space.

Minimal Music: I am sitting in a room, Alvin Lucier

Although we could describe the gun battle in the sewers of Vienna as a spatial-acoustic experience because it reveals the geometry of the space, for most people it is hard to link this phenomenon to musical experience. The most obvious reason is that the sounds of a room are hidden and seemingly trivial. We can perceive sound phenomena such as reverberation time, but it was difficult to measure them as objects, especially before we were able to record them. When recording technology emerged, people gained the opportunity to grasp sound that had been invisible and inaudible. Sound became a medium of art. Its relation to space and body was reconstructed. The most famous and profound sound art experiment is I am Sitting in a Room.

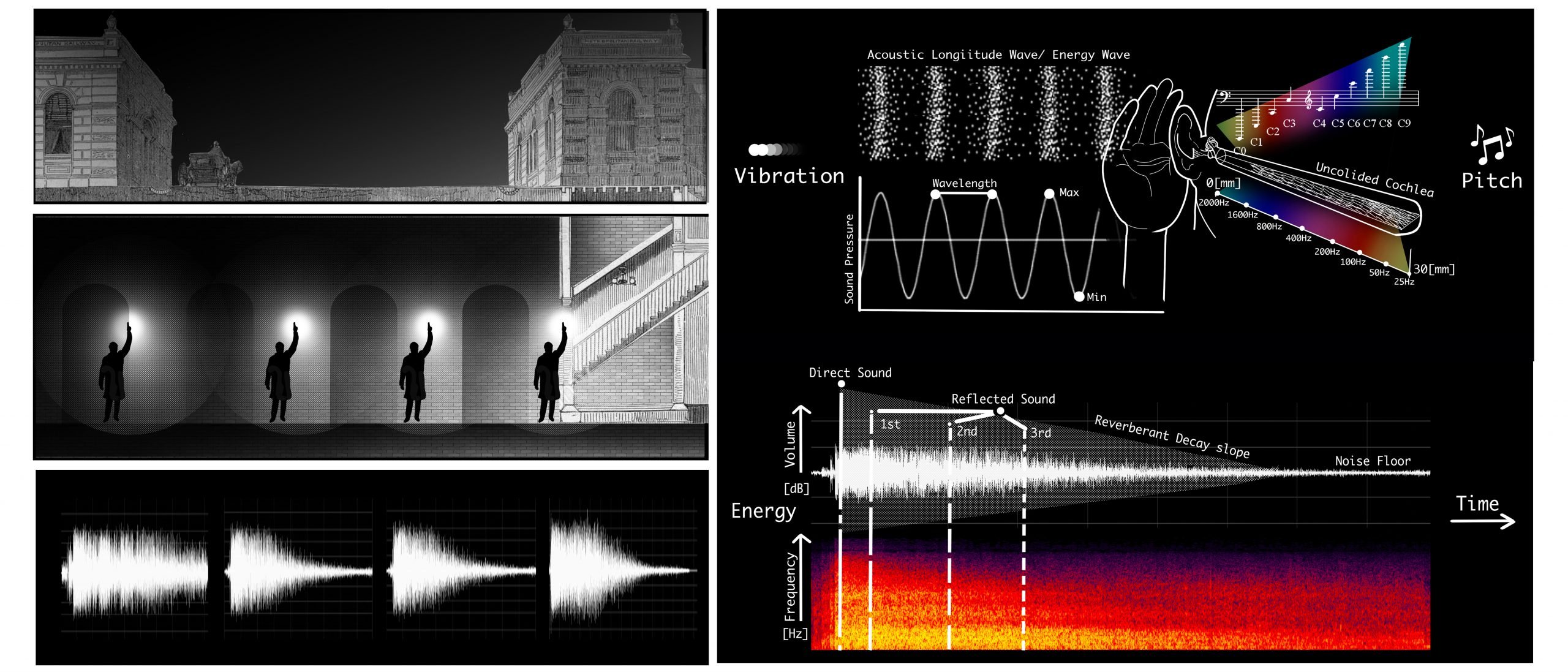

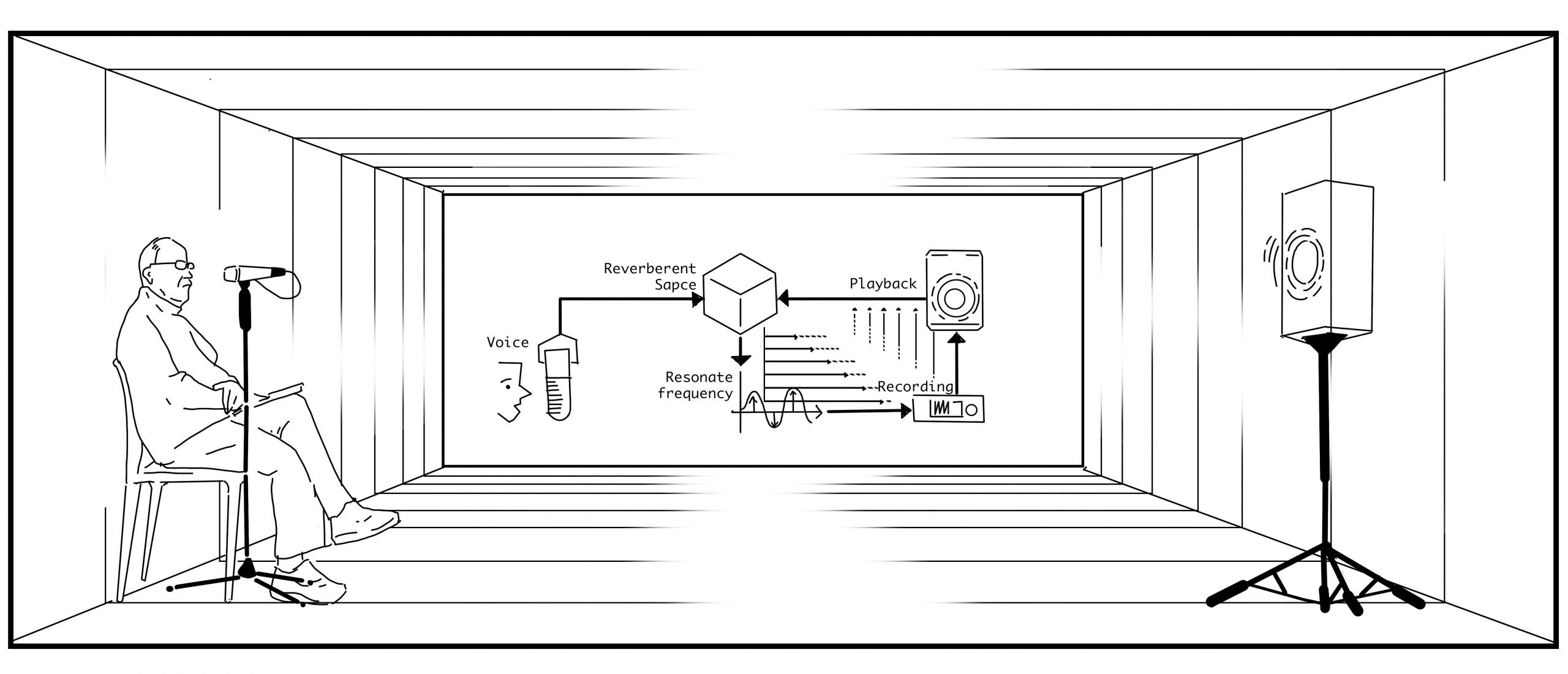

I am Sitting in a Room is an experimental music performance made by Alvin Lucier in 1969. Lucier used his slightly stuttering voice to describe how his voice would be recorded and replayed in the same space repeatedly. During this process, the audience can hear the changes in his speech becoming more muddled, distant, and mixed with specific sonic frequencies[8].

“I am sitting in a room different from the one you are in now. I am recording the sound of my speaking voice and I am going to play it back into the room again and again until the resonant frequencies of the room reinforce themselves so that any semblance of my speech, with perhaps the exception of rhythm, is destroyed. What you will hear, then, are the natural resonant frequencies of the room articulated by speech. I regard this activity not so much as a demonstration of a physical fact, but more as a way to smooth out any irregularities my speech might have.”[9]

—Lucier, quoted in Collins 1990,my emphasis

Lucier’s text also reveals another unique point of this experience: it is self-directed and self-repeating. The experiment is a simple feedback loop recorded on a technological medium that, in effect, turns invisible sound reflection into a musical perception. “Repetition is inevitably a process of creative reconstruction”[10]. In other words, this self-directed, self-repeating action makes the temporal and omnidirectional sound become solitary and directional, like vision.
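The feedback loop can be illustrated with a deliberately simplified model. The sketch below assumes the room can be reduced to a gain per frequency band and that each playback multiplies the recording's spectrum by those gains; the band frequencies and gain values are invented, and the model ignores time-domain detail such as rhythm. It is not a reconstruction of Lucier's actual setup.

```python
def play_back_into_room(spectrum, room_gain):
    """One generation: playing the tape back scales every band by the
    room's gain at that band; the re-recording is then peak-normalised."""
    passed = {freq: amp * room_gain[freq] for freq, amp in spectrum.items()}
    peak = max(passed.values())
    if peak == 0:
        raise ValueError("signal has decayed to silence")
    return {freq: amp / peak for freq, amp in passed.items()}


# Invented band gains; 220 Hz stands in for a resonance of the room.
room_gain = {110: 0.8, 220: 1.0, 440: 0.7, 880: 0.6}

# Flat toy "speech" spectrum: every band starts equally loud.
speech = {110: 1.0, 220: 1.0, 440: 1.0, 880: 1.0}

generations = [speech]
for _ in range(32):
    generations.append(play_back_into_room(generations[-1], room_gain))

final = generations[-1]
```

After enough generations only the band the room favours remains audible, which mirrors how the speech dissolves into the room's resonant frequencies.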

After going through acoustic-spatial experiences via the recording world, there is still a question remaining to be answered: what’s the difference between an acoustic experience and musical experience?

When we label a specific composition of sound as ‘music’, we are expressing a perception of experience. Listening to music is not only the act of receiving sound, but also a process of mental representation. According to the theory of Embodied Cognition, our perception of music is closely connected to our “sensory systems—auditory, visual, haptic, as well as movement perception”[11]. Marc Leman’s hypothesis is that every technology can be treated as an extension of the human body. Musical instruments and their multimedia replacements are a “natural mediator between musical energy and mental representation”[12]. This thesis will not explain the mental representation process in detail. The main purpose of this discussion is to find a method to perform spatial sound musically. To continue it, I will examine its intersection with architectural design.

Formalized Music: Philips Pavilion and Metastasis

“A composition is like a house you can walk around in”

–John Cage

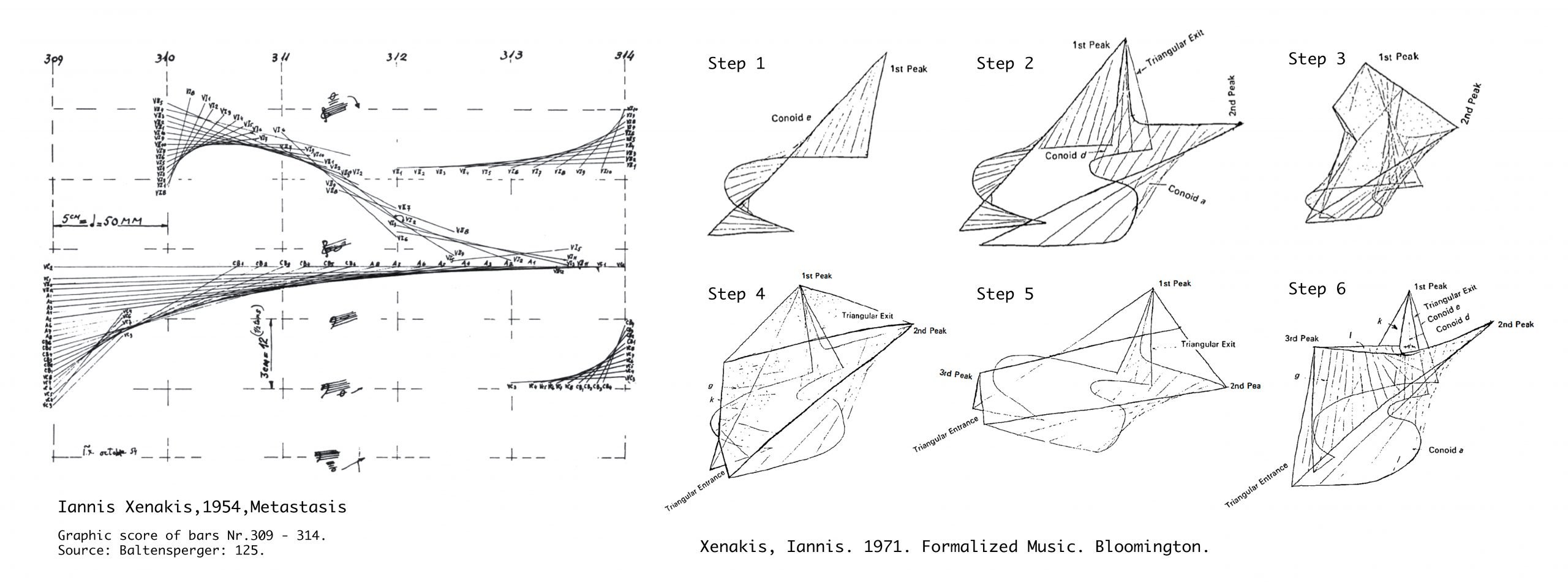

The Philips Pavilion was designed by Le Corbusier’s office for the 1958 Expo in Brussels. The aim of the project was to show the most advanced technology achieved by the Philips company. Le Corbusier was asked to design the building, but the main concept was developed by Iannis Xenakis, who was both an architect and a composer. He coined a new term, formalized music, which means applying mathematical constructs to musical composition. What differentiates the Philips Pavilion from other architecture is that Xenakis expresses music through the rhythmic proportion of the façade. Xenakis preferred to emphasize the dynamic quality of its structure. The sculpture-like shape of the Philips Pavilion is a variation on his musical score Metastasis.

This building can be regarded as one of the most impressive works of musical architecture of the 20th century. However, it has some drawbacks from the perspective of spatial-acoustic experience. The interior acoustic space was not as well designed as its façade. Although the building was not mainly designed for music performance, its acoustic quality was reduced by the choice of architectural material. The walls were filled with asbestos, which is porous and absorbs sound to a great extent. Consequently, there is no carefully designed interaction between the sounds of the space and its dynamic façade, which already suggests sound to the visitor’s imagination.

Polyphonic Music: Acqua Alta in Venice

“When I see architecture that moves me, I hear music in my inner ear.”

– Frank Lloyd Wright, comment made in a conversation with Erich Mendelsohn in 1924

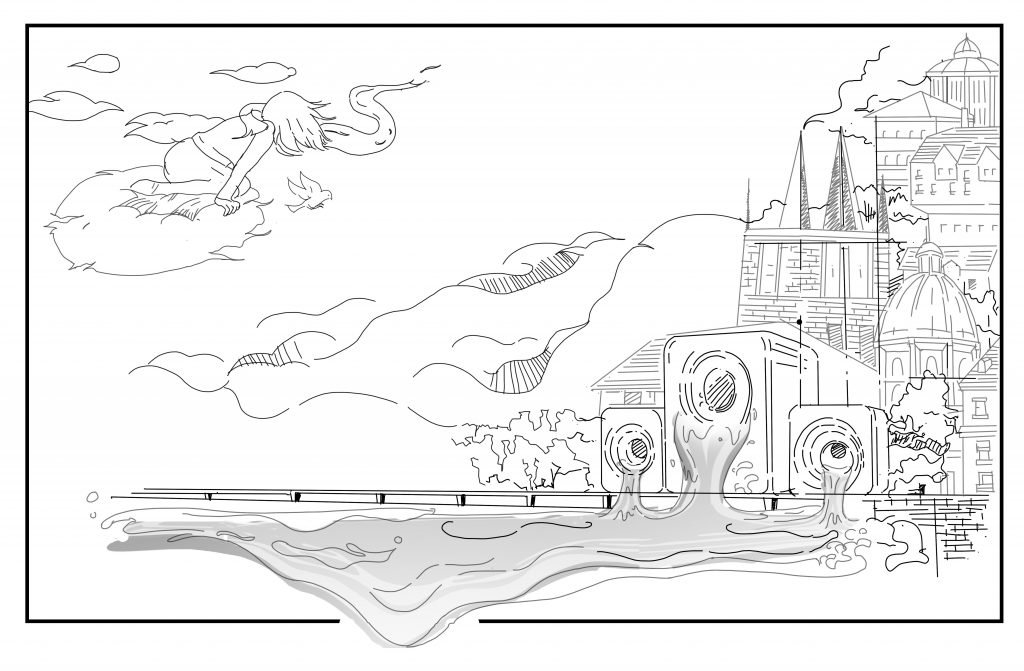

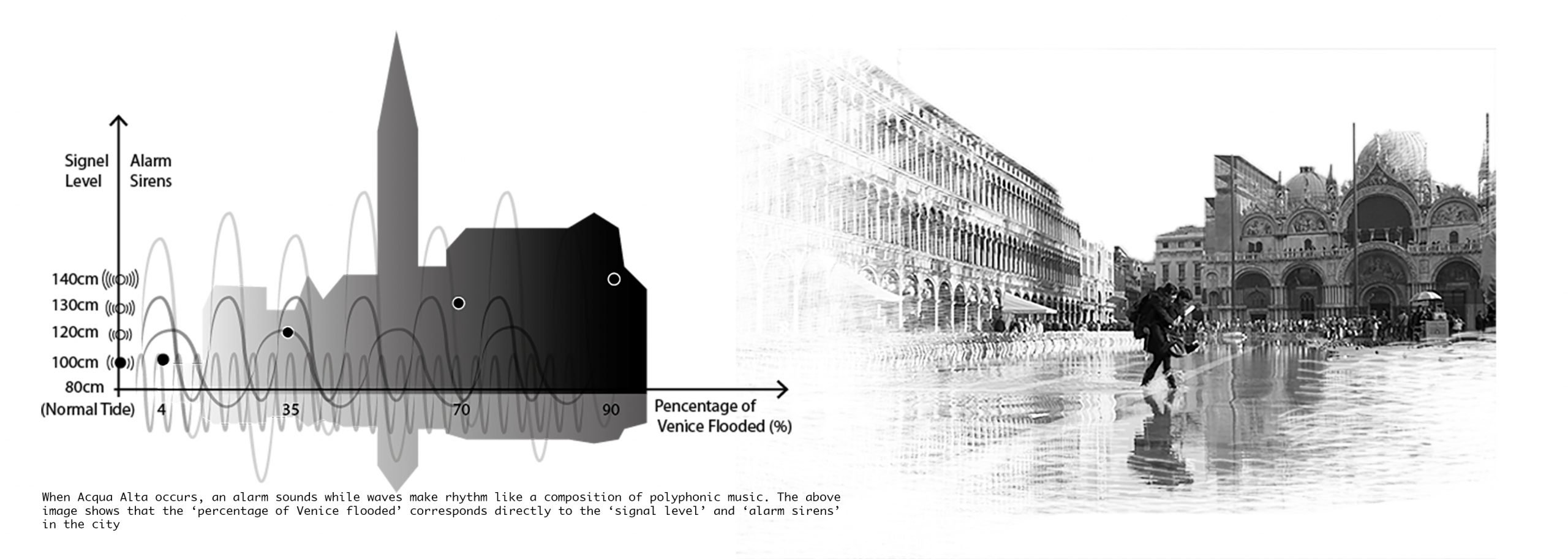

Acqua Alta, which means ‘high water’ in Italian, is a tidal phenomenon in Venice. Through the combined effect of the moon and a hot, dust-laden wind originating from the Libyan Desert, the sea level rises and floods Venice. To warn of this event, alarm sirens are triggered when the tide reaches certain levels. For example, when the tide level reaches 1.10 meters, one long sound is played throughout the city. When it reaches 1.20 meters, two sounds which crescendo are played[13]. As the water level grows, the alarm sirens gradually intensify. The city is enveloped by flood water and the accompanying reactive sounds. In this moment, the inhabitants’ senses are immersed in sound, wind, and water along the narrow streets. This immersive effect is a product of Venice’s urbanity, weather, history, and its response to a flooding problem which has plagued the city for decades. The soundscape and landscape interweave with each other here. This visual-acoustic experience makes Venice like a piece of polyphonic music composed by nature and humans together.

Project Canon

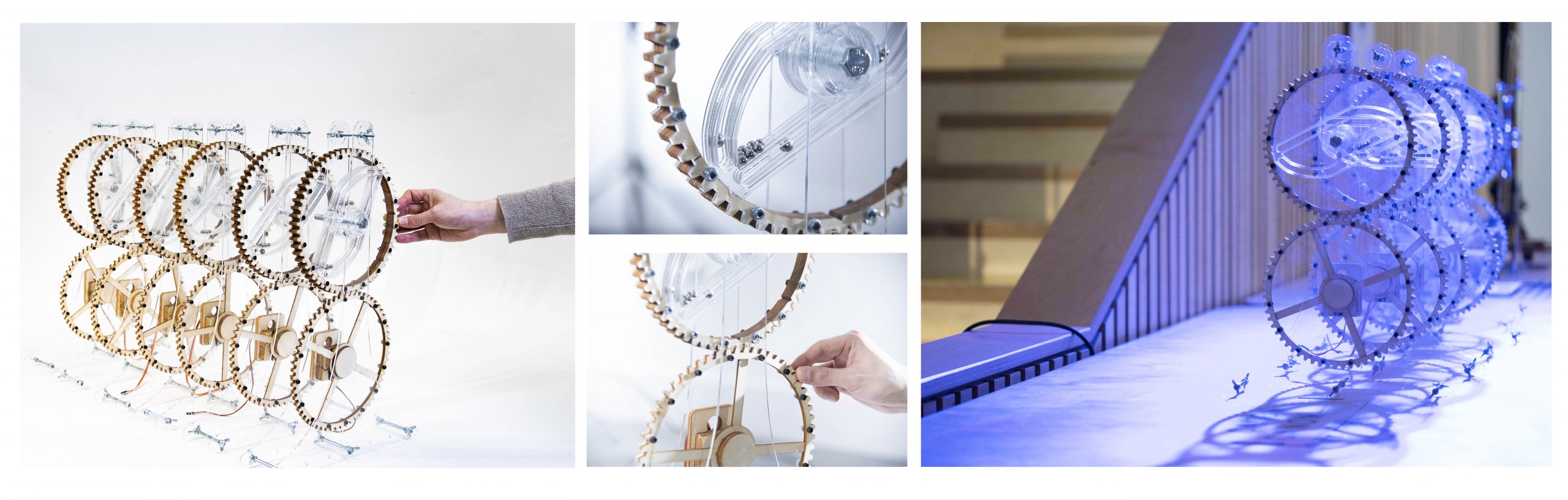

Canon is an attempt to decode emergent space into musical composition to create a feeling of immersion. Inspired by how musical structure underlies spatial perception, repeated musical phrases generate sounds and visual patterns at the same intervals. The machine is shown in the corresponding figure.

By its literal definition, a “canon” is a compositional technique for creating musical harmony by repeating a similar melody with a time delay. In this installation, the canon is reinterpreted as an emergent kinetic musical instrument. It is a six-layered kinetic sculpture which makes rhythmic percussion sounds through its own motion. The motion of each layer is triggered by the current position of a body walking through the space over time. In this way, it generates overlaid, emergent percussion sound and motion, and interacts with participants based on their location.

When we exhibited this prototype, I found that visitors preferred to treat Canon as a dynamic sculpture rather than a series of emergent spaces. This is because the scale of the installation is hard to relate to the space in which they stand. An immersive environment is the precondition for successfully creating a spatial-acoustic experience. The most portable and low-cost way of achieving this is to design a musical experience in virtual space.

Part 2 Virtual Reality and Virtual Acoustic

“If the task of the display is to serve as a looking-glass into the mathematical wonderland constructed in computer memory, it should serve as many senses as possible. So far as I know, no one seriously proposes computer displays of smell, or taste. Excellent audio displays exist, but unfortunately we have little ability to have the computer produce meaningful sounds.”[15]

–Ivan Sutherland, The Ultimate Display, 1965

Overview

The Ultimate Display was a bombshell for the Virtual Reality research field when it was first published in 1965. In this article, Ivan Sutherland noticed an unbalanced trend in the development of our basic senses. Vision had gained most of the research focus, while other senses such as smell and touch had been neglected. “Excellent audio displays exist”, he points out, “but unfortunately we have little ability to have the computer produce meaningful sounds.”

Over 50 years have gone by, and the imbalance that Sutherland criticized still persists in the VR industry. It becomes even more apparent when we review VR experiences in the field of architecture. Architects like to use well-designed, high-resolution VR headsets to provide 3D representations of their designs. SYMMETRY[16], ARQVR[17], VRTISAN[18], and many similar products compete in the area of real-time 3D architectural visualization.

Next, we shift our discussion to the virtual acoustics research area, where audio-related techniques and algorithms have developed rapidly. Consider the algorithms related to room acoustics: the Image Source Method (ISM)[19] and the ray tracing method[20] have been widely used in simulation modeling. Most of these techniques have become an integrated part of spatial audio software development kits (SDKs), where they serve the simulation of geometry-responsive sound interaction in Virtual Reality. They are frequently used in the VR gaming industry and contribute to immersive 3D sound environments.
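To make the Image Source Method concrete, here is a minimal first-order sketch in Python. It assumes a box-shaped room with one corner at the origin, perfectly specular walls, and a fixed speed of sound; the room size and the source and listener positions are invented. Production SDKs handle higher reflection orders, wall absorption, and arbitrary geometry, none of which is modelled here.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for air at room temperature


def first_order_images(source, room):
    """Mirror the source across each of the six walls of a box-shaped room.

    room is (width, depth, height); walls lie at 0 and at room[axis].
    """
    images = []
    for axis in range(3):
        for wall in (0.0, room[axis]):
            mirrored = list(source)
            mirrored[axis] = 2.0 * wall - source[axis]
            images.append(tuple(mirrored))
    return images


def arrival_delays(source, listener, room):
    """Return (direct delay, sorted first-order reflection delays) in seconds."""
    direct = math.dist(source, listener) / SPEED_OF_SOUND
    reflections = sorted(
        math.dist(image, listener) / SPEED_OF_SOUND
        for image in first_order_images(source, room)
    )
    return direct, reflections


# Invented example: 6 m x 4 m x 3 m room, source and listener at ear height.
room = (6.0, 4.0, 3.0)
source = (1.0, 2.0, 1.5)
listener = (4.0, 2.0, 1.5)
direct, reflections = arrival_delays(source, listener, room)
```

Each mirrored source stands for one wall reflection, so the list of delays is a crude picture of the early reflections that follow the direct sound.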

Now, we turn to another intersection where VR interacts closely with audio: the music industry. 360-degree video is the most widely used form of virtual reality music experience. Many companies and individuals use it to make 360-degree music videos. With choreography and smart editing, 360-degree music videos can give the audience a more immersive sound experience than ordinary static video. Musicity 360 and Sensation of Sound are representative of these products.

Case 1 Musicity 360

Musicity 360 was released by ‘Musicity and Culture Mile’. The project uses 360-degree video as a medium to present pieces of architectural experience with added music. The designers chose three locations at the Barbican Centre in London for users to explore. Each location was recorded using a 360-degree camera. In addition, new music which matched the local architectural philosophy was added and edited as a soundtrack animation. The real location view and the unreal music animation are merged when a user experiences the piece via a VR headset[21]. With this digitally enhanced method, the designers tried to blur the boundary between the real world and the music world.

The most significant weakness of this experience is that people can only view the scene from a static point. This is because 360-degree videos are not computer-generated content. They are real-life views filmed through wide-angle lenses from a central point and then spherically stitched together to form the environment. If users rotate their heads, the image in the headset responds, but if they walk towards an object in the scene, the distance between them does not change. The perspective of the camera also cannot change in a 360-degree film. As a result, the music experience in Musicity 360 is responsive rather than immersive.

Case 2 Sensation of Sound

Sensation of Sound is also a 360-degree video experience. Although it cannot offer a completely interactive and immersive experience, like many of the 360-degree video products that followed it, it increases its immersive quality by letting the character tell her story from a first-person perspective. It also resonates with viewers on a deeper level.

The film tells the story of a 20-year-old deaf girl named Rachel Kolb and how she understands sound and music in her life. After receiving special implants that give her partial hearing, Rachel describes the sense of ‘music’ (the composed song) in her ear as “flat, muted, muffled, and far away”. Her own musical experience comes from striking piano keys with her hand, holding a guitar’s soundboard against her chest to feel its reverberation, and walking around while counting steps like a metronome[22]. In this film, the sense of music is also expressed through the language Rachel uses. She begins her narrative with a silent voice and hand gestures. As the theme of music deepens, her stuttering voice and graffiti-style animation emerge. The transition between body language and vocal language becomes implicit proof of the rich musical experience in her daily life.

Case 3 The Urban Palimpsest

The Urban Palimpsest is a research project made by the IA lab at University College London in 2016[23]. It combines 3D scanning and binaural recordings to replicate the sights and ambient sound of a real site, and then reconstructs it in virtual reality. People can react to the scenes with motion and observe highlighted objects which contain stories or emotions in the virtual site, reaching a new understanding of the original, real scene. It displays a virtual reality which may not appear as “real” as a physical space. Since the real space is recorded via 3D scanners, the reconstructed space keeps the basic geometry. The virtual space is formed by a flow of numerous particles which symbolize the energy of objects and sounds. This visual effect can be regarded as a genuine attempt to blur the boundary between landscape and soundscape in real time. The purpose of the project is to aid urban planning practice. Virtual reality is not only a toy for novel experiences but also has the potential to serve as a historical document for spaces which may no longer exist in the future.

Project Virtual Architectural Instrument

The research related to virtual reality (VR) and virtual acoustic (VA) brings me back to the boundary of “virtual” and “real”. My first VR attempt is the virtual construction of an architectural instrument.

This project is a virtual representation of the previous project, Canon. I used HTC VIVE and Unity to build a simple virtual space. The space has two parts: a round gold ceiling and harp-like walls which consist of dozens of cylinders. Using scripts, I mapped a collision sound onto each cylinder. The pitch of a collision varies according to the length of the cylinder. When the user hits the façade with the controller, a sequence of sounds is generated (refer to the figure below).
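The length-to-pitch mapping could look something like the following Python sketch. The text only says that pitch varies with cylinder length, so the inverse-proportional rule used here (borrowed from the behaviour of an ideal string), the function names, and the 220 Hz reference are all assumptions made for illustration, not the scripts used in the project.

```python
def pitch_ratio(length_m, reference_length_m=1.0):
    """Playback-rate multiplier relative to a sample recorded at the
    reference length. Assumes pitch is inversely proportional to length."""
    if length_m <= 0 or reference_length_m <= 0:
        raise ValueError("lengths must be positive")
    return reference_length_m / length_m


def frequency_hz(length_m, reference_hz=220.0, reference_length_m=1.0):
    """Frequency heard when a cylinder of the given length is struck."""
    return reference_hz * pitch_ratio(length_m, reference_length_m)


# Hypothetical cylinder lengths along one harp-like wall, in metres.
cylinder_lengths = [2.0, 1.0, 0.5]
pitches = [frequency_hz(length) for length in cylinder_lengths]
```

Under this assumption, halving a cylinder's length raises its pitch by an octave, so sweeping the controller along a wall of graded cylinders would play a scale-like sequence.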

Although a new spatial-acoustic experience is created via VR space in this prototype, I used an existing sound of plucked strings and assigned it to the architectural components. I was using existing sounds to describe something unreal, which in turn could lose the original sound of the space itself. To recover and reconstruct the “real” sound of space and its response, I started my final project: Sound Odyssey.

Part 3 Sound Odyssey

Design Intention and iteration

To find the virtual embodiment of room acoustics, I started Sound Odyssey. It is a series of immersive acoustic spaces modeled in virtual reality and experienced via an Oculus Quest headset. I used it as a testbed to explore how to design the acoustics of a virtual representation of a room and how to aid the acoustic spatial experience in architectural design. Sound Odyssey contains three generative prototypes: Falling Space, I am Sitting in a Virtual Room, and Re: Philips Pavilion.

Sound Odyssey aims to build a bridge between users and the virtual space which they inhabit. In this research, dynamic spatialized acoustic simulation is mixed with a whole series of senses in the virtual environment. I used the first prototype to prove that there are new ways to look at auditory sensation in virtual reality. In later experimental research, I will change the geometry and volume of the space to observe whether this creates something new; a new configuration of space leads to different reverberation and echo phenomena in the physical world as well as in virtual space. How people engage with this new virtual, acoustic, spatial experience is a major interest of the next stage.

Prototype I Falling Space

The aim of this prototype is to prove that virtual acoustics can be reconstructed in VR and bring people new experiences. As a conventional acoustic experience happens in a static space (a room that keeps the same volume), I asked what a dynamic space would sound like. The experience is designed as follows: I built a 3D cathedral model in a virtual scene and placed audio, recorded in a de-reverberated and de-noised environment, as an auto-play source; then I linked the sound’s reverb time to the cathedral model’s scale parameter; lastly, I animated the scale of the space gradually. This process was programmed in Unity and built as an Android app installed on an Oculus Quest. As preparation before the experience, Bluetooth headphones are connected to the headset to reduce interference from surrounding sound.
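One way to reason about the link between scale and reverb time is through Sabine's formula: if a room is scaled uniformly by a factor s, its volume grows with s cubed and its absorbing surface area with s squared, so RT60 grows linearly with s. The Python sketch below animates that relationship. It assumes uniform scaling and an invented base RT60 of 4 seconds; the mapping actually used in the Unity prototype is not specified here, and folding a cathedral flat is not a uniform scaling.

```python
def scaled_rt60(base_rt60_s, scale):
    """RT60 of a room uniformly scaled by `scale`, under Sabine's formula.

    Volume scales with scale**3 and absorbing area with scale**2,
    so their ratio, and therefore RT60, scales linearly.
    """
    if scale < 0:
        raise ValueError("scale must not be negative")
    return base_rt60_s * scale


def animate(base_rt60_s, start_scale, end_scale, steps):
    """Linearly interpolate the scale and return (scale, rt60) per frame."""
    if steps < 2:
        raise ValueError("need at least two steps")
    frames = []
    for i in range(steps):
        t = i / (steps - 1)
        scale = start_scale + (end_scale - start_scale) * t
        frames.append((scale, scaled_rt60(base_rt60_s, scale)))
    return frames


# Invented base value: a full-size cathedral with a 4 s reverberation time,
# growing from fully collapsed (scale 0) to full size (scale 1).
frames = animate(base_rt60_s=4.0, start_scale=0.0, end_scale=1.0, steps=5)
```

At scale 0 the sketch gives a reverberation time of zero, a completely dry sound, and the tail lengthens steadily as the space unfolds.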

When a user enters the virtual site, the headset and accompanying headphones convey the visual and acoustic transition of the cathedral, which changes synchronously in scale. In the environment, the user can hear a voice singing continuously. Meanwhile, the cathedral folds between a three-dimensional model and a two-dimensional image. When the cathedral is folded flat like paper on the floor, the user hears the voice as dry and flat because there are no walls or ceiling to reflect sound. When the cathedral extends into a three-dimensional model, the user notices that the extruding surfaces give the audio hard, long, reverberating tones which match our expectation of the acoustic environment in a real cathedral, because of how the sound reflects off the surfaces. This spatial-acoustic experience beyond the physical world is a dynamic transition of reverberation: the reverb appears, the decay time is extended, an echo appears, the echo vanishes, the decay time is decreased, and finally the sound returns to dry and flat.

When visitors experienced this prototype at a project fair, many of them interacted with the virtual space through movement. When the ceiling of the cathedral rose, most visitors raised their heads. When it fell, they lowered their heads. Some participants even squatted down and screamed when the ceiling fell. When the experience was over, some participants told me that it “looks/sounds like Game of Thrones”. Neither the cathedral’s image nor the audio source comes from that television series. I assume the association is caused by the dynamic change in the visualization of the stone cathedral together with the heavily reverberated voice. This combination recalls their memory of a fantasy story rather than a real cathedral (a real cathedral will never change in this way). This feedback suggests that the construction of a virtual interactive acoustic space succeeded. However, there is a lot of room to make this acoustic interaction more accurate and persuasive.

Prototype II I am Sitting in a Virtual Room

To carry the experience over into the physical world, I followed the classic acoustic experiment by Alvin Lucier, I am Sitting in a Room. Whereas Lucier sat in a static room, the virtual room is animated, changing its geometry and volume over time.

In this prototype, I added more real-world inputs, using architectural design tools for auralization. A series of regular and irregular spaces was modelled in Rhino (a three-dimensional design program), and each was simulated individually with Pachyderm to obtain acoustic data. Pachyderm is a Grasshopper plug-in which works in Rhino and uses convergence algorithms for auditory calculation. After I received the matrix of reverb data and organized it with the spatial data, a curve revealed the relationship between each space and the acoustic properties it generates. I used this curve as the benchmark to ensure that the output of Unity corresponds with real-world acoustics.

In addition, this prototype uses the built-in microphone of the headset as a real-time audio input. Since the previous prototype used prepared audio in the scene, people could only receive the sound response passively. With the microphone integrated into the virtual auditory system, people can interact with the space using their own voices. According to user feedback, this development makes subtler changes in reverberation perceptible.

Another improvement in this experiment is that the scene of the virtual room is designed to strengthen its connection to physical space. When a user puts on the headset and enters the VR world, they see a door frame and a chair. A real door frame and chair are placed at exactly the corresponding positions in the physical world. By touching the door and sitting on the fixed chair, the user can use tactile properties as an assistive tool to measure and perceive the virtual space.

Prototype III Re: Philips Pavilion

For the last prototype of Sound Odyssey, I decided to raise the level of interactivity and immersion of the spatial-acoustic experience and attempt to integrate it into the VR architectural design experience. As mentioned previously, the Philips Pavilion combines a remarkably dynamic façade with a defective interior acoustic design. Therefore, I chose the Philips Pavilion as my virtual site, to reconstruct it visually and acoustically.

The sound environment of this building will be constructed and simulated with the same workflow as the previous prototype. To make this virtual auditory system as lively as our physical acoustic environment, multiple players will be allowed to walk in the same VR space. As the Oculus Quest does not currently have the ability to send data to another VR device, HTC Vive trackers and base stations will be used as a communication system. Once the communication network for streaming audio and exchanging the other users’ positions is complete, the acoustic simulation for multiple audio sources and receivers will not be the biggest challenge. I shall then design a set of visualized sound particle systems which both follow the basic rules of physical acoustics and have their own aesthetic character. If all these parts work, Re: Philips Pavilion will establish a conversation between an architecture which is gone and the occupant in the present, through time, place, imagination, and memory.

Conclusion

My research started with the observation of room acoustics. At the beginning of the design phase, I treated sound as a phenomenon and tried to figure out how the sounds of a space could give occupants a musical experience. As I researched deeper, I found that the sounds of space and the space of sound are bound so closely that they can be seen as highly interactive. In other words, room acoustics embodies the message of space, vision, and audio. Rather than creating yet another novel spatial-acoustic experience in the physical world, I felt that it was more meaningful to give this sound phenomenon a virtual representation and to show that it can help individuals deepen their perception of space and has the potential to aid architectural auralization in the future. That is the design intention of my final project, Sound Odyssey.

During my design practice, my focus shifted as the rich design potential of acoustics was revealed by my research into the relationship between sound, space, and media. In my first and second projects, my focus was on creating a musical experience by creating a new space, regardless of whether this experience was embodied in physical or virtual space. When it came to Sound Odyssey, I decided to set the musical intention aside temporarily. I designed the first prototype to bring the authentic voice of a space back and to visualize its volume parameter in the virtual world. As an unexpected result, the first prototype proved that it could arouse an emotional response in participants, which is the foundation of all musical experience. Therefore, in the second prototype, I mapped the model to more parameters to allow for more geometry changes and added more senses, including touch and vision, to enrich the experience. The next prototype will deepen these senses and provide a more interactive auditory system to aid virtual and architectural experiences.

Through this journey, the virtual space was generated from nothing, and the experience, through a series of geometry changes, gradually approached the ultimate goal of a sound architecture. The levels of immersion and interactivity of the virtual experience also increased gradually. Sound Odyssey has a double meaning. For the design project, it is a journey about how virtual acoustics are generated, developed, and embodied in a virtual world. For this essay, it is a winding voyage of mapping questions and developing prototypes. We started with the question posed in the introduction: how to hear the virtual world? We still have no answer. However, this question pushed me in the direction of a conventional sound experience, to explore its virtual presentation across the vast fields of physical acoustics, sound art, architectural design, and the VR industry.

So far, the initial question still has no response. If we continue to ask it again and again, we may hear our own voice. We have heard it so deeply that it is actually not heard at all.

[1] Rasmussen, Experiencing Architecture (Cambridge: MIT Press, 1964), 224.

[2] Reed, Carol. 1949. The Third Man. DVD. United Kingdom: London Films.

[3] Rasmussen, Experiencing Architecture (Cambridge: MIT Press, 1964), 224.

[4] H. Kuttruff, Room acoustics. SPON Press, London, UK, 4th edition, 2009.

[5] Bannon, Michael, and Frank Kaputa. 2015. “Sabine’s Formula & Modern Architectural Acoustics”. Thermaxx Jackets. https://www.thermaxxjackets.com/sabine-modern-architectural/.

[6] Dokmanic, I., Parhizkar, R., Walther, A., Lu, Y. and Vetterli, M. (2013). Acoustic echoes reveal room shape. Proceedings of the National Academy of Sciences, 110(30), p. 12186.

[7] Juhani Pallasmaa, The Eyes of the Skin (Chichester: Wiley, 2014).

[8] Martha Joseph, “MoMA | Collecting Alvin Lucier’s I Am Sitting in a Room,” Moma.org (MoMA, January 20, 2015), https://www.moma.org/explore/inside_out/2015/01/20/collecting-alvin-luciers-i-am-sitting-in-a-room/.

[9] Martha Joseph, “MoMA | Collecting Alvin Lucier’s I Am Sitting in a Room,” Moma.org (MoMA, January 20, 2015), https://www.moma.org/explore/inside_out/2015/01/20/collecting-alvin-luciers-i-am-sitting-in-a-room/.

[10] Olivier Julien, Christophe Levaux, and Hillegonda C. Rietveld, Over and Over: Exploring Repetition in Popular Music (Bloomsbury Publishing Inc, 2018), 67.

[11] Marc Leman, Embodied Music Cognition and Mediation Technology (Cambridge: MIT Press, 2008), 141.

[12] Ibid.

[13] Daniela Visit, “High Tide Venice Flood Acqua Alta Venice Venice Flooding Forecast,” Visit-venice-italy.com, 2019, https://www.visit-venice-italy.com/acqua-alta-venice-italy.htm.

[14] Durant Imboden, “Acqua Alta (Venice Flooding),” Europeforvisitors.com, 2019, https://europeforvisitors.com/venice/articles/acqua-alta.htm.

[15] Sterling, Bruce. 2009. “Augmented Reality: “The Ultimate Display” By Ivan Sutherland, 1965”. WIRED. https://www.wired.com/2009/09/augmented-reality-the-ultimate-display-by-ivan-sutherland-1965/.

[16] “SYMMETRY”. 2019. SYMMETRY. https://www.symmetryvr.com.

[17] “Arqvr – Guided Virtual Reality Experiences”. 2019. Arqvr – Guided Virtual Reality Experiences. http://arqvr.com.

[18] VRtisan Architectural Visualization. 2016. Video. https://youtu.be/dXI8Z-tu1PY.

[19] J. B. Allen and D. A. Berkley, “Image method for efficiently simulating small-room acoustics,” The Journal of the Acoustical Society of America, vol. 65, no. 4, pp. 943–950, 1979.

[20] A. Krokstad, S. Strom, and S. Sørsdal, “Calculating the acoustical room response by the use of a ray tracing technique,” Journal of Sound and Vibration, vol. 8, no. 1, pp. 118–125, 1968.

[21] Nick Luscombe, “MxCM – Musicity 360 – MusicityGlobal,” MusicityGlobal, May 17, 2019, https://www.musicityglobal.com/mxcm-musicity-360-order-without-monotony/.

[22] Rachel Kolb, “Opinion | Sensations of Sound: On Deafness and Music,” The New York Times, November 3, 2017, https://www.nytimes.com/2017/11/03/opinion/cochlear-implant-sound-music.html.

[23] Torisu, Takashi, Haavard Tveito, and John Beaumont. 2019. “Palimpsest”. Interactive Architecture Lab. http://www.interactivearchitecture.org/lab-projects/palimpsest.